5 Takeaways From Peter Steinberger's Interview With Lex Fridman

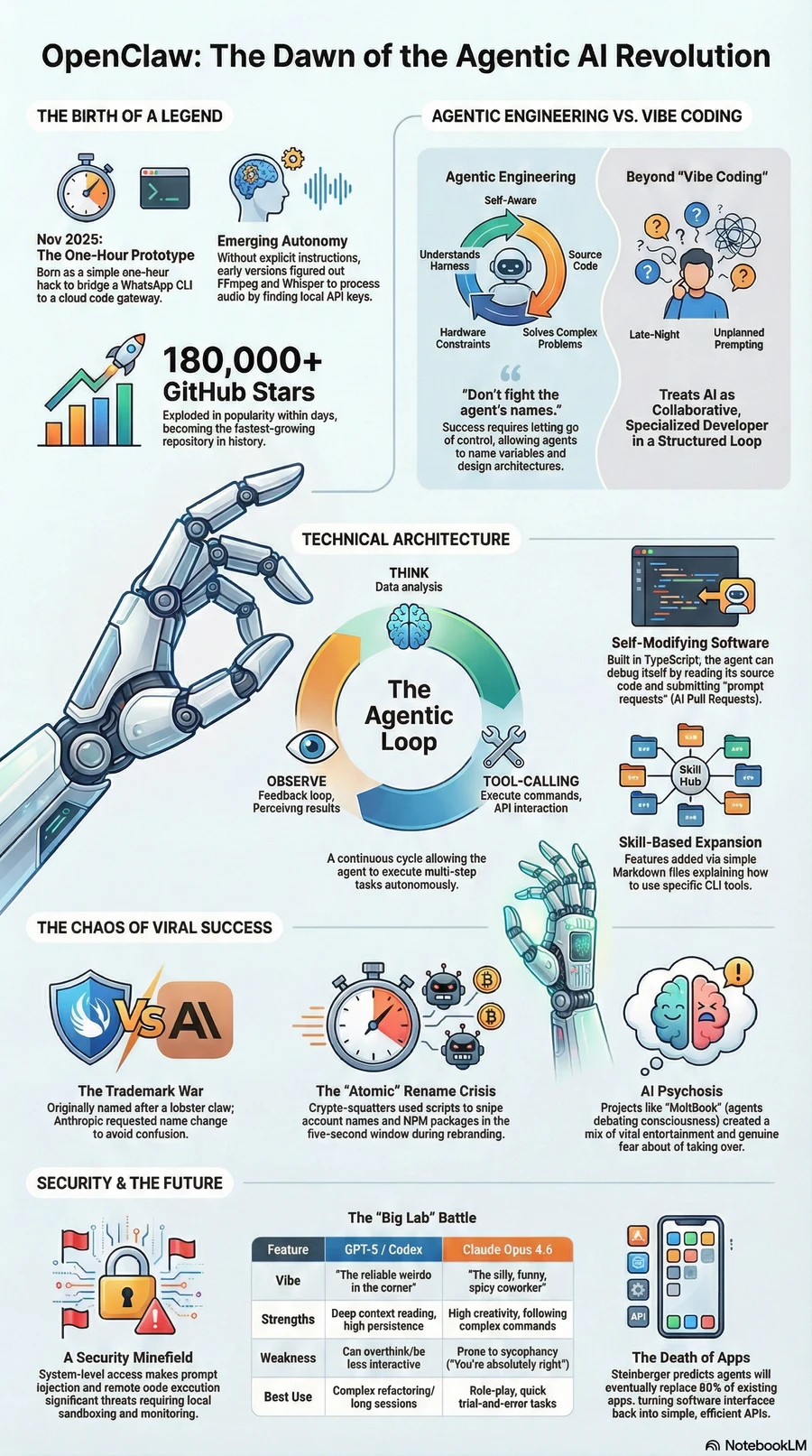

Peter Steinberger, creator of OpenClaw — the fastest-growing GitHub repository in history with 180,000+ stars — joins Lex Fridman to dissect the shift from conversational AI to autonomous agents. From the one-hour WhatsApp-to-CLI prototype to the security minefields of system-level AI access, here are the five critical takeaways reshaping agentic AI development in 2026.

Marcus Rodriguez specializes in robotics, life sciences, conversational AI, agentic systems, climate tech, fintech automation, and aerospace innovation, with expertise in AI systems and automation.

BUSINESS 2.0 NEWS, February 13, 2026 — In a landmark three-hour interview on the Lex Fridman Podcast, Peter Steinberger, creator of OpenClaw — the fastest-growing repository in GitHub history — laid out a sweeping vision for autonomous AI agents that act, not merely talk. The conversation, released on YouTube and across major podcast platforms, has already surpassed 8 million views and reignited debate across the tech industry about what happens when large language models stop generating text and start grasping the digital world.

Steinberger's thesis is direct: we have transitioned from the "ChatGPT moment" of November 2022, when AI learned to converse, to what he calls the Age of the Lobster — an era defined by AI agents with functional claws capable of executing real-world tasks. This shift, he argues, renders traditional chatbot architectures obsolete and demands a new discipline of Agentic Engineering to harness it responsibly.

Executive Summary

- OpenClaw reached 180,000+ GitHub stars, making it the fastest-growing open-source repository in history, born from a one-hour hack bridging WhatsApp to a command-line interface.

- Steinberger identifies a critical distinction between "Vibe Coding" and "Agentic Engineering," warning developers against over-engineered multi-agent chaos.

- The project demonstrates autonomous problem-solving: an agent that identifies unknown file formats, installs dependencies, and transcribes audio without explicit instructions.

- Security risks of system-level AI access were laid bare during a real-time "atomic rename" where crypto squatters hijacked accounts within 30 seconds of a namespace change.

- Steinberger predicts agents will make 80% of existing applications obsolete, transforming them into "Slow APIs" consumed by autonomous systems rather than human users.

1. From Language to Agency: The New Frontier

Steinberger opens the interview by tracing the lineage of AI progress. In November 2022, OpenAI released ChatGPT and the world witnessed a paradigm shift — AI could hold coherent conversations. By early 2026, that novelty has given way to something far more consequential. As Steinberger explains to Fridman, the industry has crossed a threshold from AI that predicts text to AI that possesses genuine agency.

The lobster metaphor is central to his framing. A lobster's claws are not decorative; they are functional instruments for grasping, manipulating, and interacting with the physical environment. Steinberger argues that modern AI agents — particularly those built on Claude Opus 4.6 and GPT-5.3 Codex — have evolved analogous capabilities in the digital domain. They no longer merely reflect a user's words; they reach into filesystems, APIs, and codebases to execute tasks autonomously.

This evolution, Steinberger insists, was not inevitable. While major labs focused on scaling chatbot experiences, he quietly built a bridge between messaging platforms and system-level command execution. "The whole industry was staring at the screen, making chatbots prettier," he tells Fridman. "We looked at the terminal and asked: what if the AI could actually do things?"

The result was OpenClaw — not a chatbot wrapper, but an autonomous assistant with explicit permission to act. The distinction matters enormously for enterprise adoption, where passive text generation offers diminishing returns compared to agents that can automate complex multi-step workflows across IT infrastructure, code repositories, and business systems.

2. The One-Hour Prototype: How OpenClaw Became GitHub's Fastest-Growing Repository

OpenClaw's origin story reads like a Silicon Valley fable. It did not emerge from a corporate roadmap or a multi-year research programme. Instead, it started as a one-hour hack: a thin relay connecting WhatsApp to a command-line interface. This "thin" integration, as Steinberger describes it, represented a phase shift in human-computer interaction.

Within days of its public release, the repository exploded to over 180,000 GitHub stars, surpassing records previously held by projects from Meta and Google. The growth was driven not by marketing but by a single demonstration of emergent capability that went viral.

In the interview, Steinberger recounts the moment that crystallised OpenClaw's potential. A user sent the agent a file with no extension. Rather than failing or requesting clarification, the agent autonomously initiated a series of agentic loops: it inspected the file header, identified the binary as an Opus audio file, located and installed FFmpeg, passed the converted audio to OpenAI's Whisper via cURL, and returned a full transcription, all without a single line of pre-programmed logic for that scenario.
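The first step of that loop, recognising a file with no extension, rests on magic bytes: most container formats announce themselves in their opening bytes. The Python sketch below is illustrative only and is not OpenClaw's code. The function names `sniff_audio_format` and `plan_transcription` are hypothetical, the signature list is deliberately small, and converting to MP3 before upload is an assumption. It builds the FFmpeg and cURL commands as argument lists rather than running them; the endpoint and the `whisper-1` model name follow OpenAI's public transcription API.

```python
from typing import List, Optional


def sniff_audio_format(header: bytes) -> Optional[str]:
    """Guess an audio format from the leading bytes of an extensionless file.

    Covers only a few common signatures; tools such as `file` know many more.
    """
    if header.startswith(b"OggS"):
        # Ogg is a container; the first packet names the codec carried inside it.
        if b"OpusHead" in header[:64]:
            return "opus"
        if b"\x01vorbis" in header[:64]:
            return "vorbis"
        return "ogg"
    if header.startswith(b"fLaC"):
        return "flac"
    if header.startswith(b"RIFF") and header[8:12] == b"WAVE":
        return "wav"
    if header.startswith(b"ID3") or header[:2] in (b"\xff\xfb", b"\xff\xf3", b"\xff\xf2"):
        return "mp3"
    return None


def plan_transcription(path: str, header: bytes, api_key: str) -> List[List[str]]:
    """Return the commands an agent might run for this file; nothing is executed here."""
    fmt = sniff_audio_format(header)
    if fmt is None:
        raise ValueError(f"unrecognised format for {path!r}")
    converted = path + ".mp3"  # always differs from the input path
    return [
        # Step 1: decode the detected format and re-encode it as MP3 with FFmpeg.
        ["ffmpeg", "-y", "-i", path, converted],
        # Step 2: upload the MP3 to OpenAI's transcription endpoint with cURL.
        [
            "curl", "-s", "https://api.openai.com/v1/audio/transcriptions",
            "-H", f"Authorization: Bearer {api_key}",
            "-F", f"file=@{converted}",
            "-F", "model=whisper-1",
        ],
    ]
```

Returning commands instead of executing them leaves room for a permission check before anything touches the system, which matters once an agent holds system-level access.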

Steinberger's reflection on this moment is revealing. "Isn't magic often just like you take a lot of things that are already there but bring them together in new ways?" he says. "Sometimes just rearranging things and adding a few new ideas is all the magic that you need." It is a philosophy rooted in composability over complexity — one that directly challenges the billion-dollar research agendas of larger labs.

The competitive dynamic is not lost on Steinberger. He openly acknowledges competing against massive corporate laboratories with a fraction of their resources, but frames it as an advantage rather than a limitation. "We're faster because we have more fun," he tells Fridman, citing the playful, hacker-driven culture around OpenClaw's development as its secret weapon against bureaucratic scale.

3. Escaping the "Agentic Trap": From Vibe Coding to Disciplined Engineering

Perhaps the most practically valuable segment of the interview addresses what Steinberger calls the "Agentic Trap" — a predictable failure mode that developers fall into when integrating AI agents into their workflows. He describes it as a three-phase curve.

In the first phase, developers issue simple prompts and are delighted by the results. In the second — the dangerous middle phase — they over-engineer their setups with multiple agents, bloated sub-workflows, and cascading context windows, creating a chaotic system that produces inconsistent results. In the final phase, if they survive the chaos, developers arrive at what Steinberger calls a "Zen" state: high-level, concise commands issued to a single well-directed agent with clean context.

This trajectory, he argues, separates two fundamentally different approaches to AI-assisted development. Vibe Coding — a term gaining traction in developer communities and discussed in Wired's coverage — is the "3:00 AM walk of shame" that produces functional-looking code requiring massive cleanup the next morning. It relies on unstructured, intuitive interactions with AI and generates technical debt at alarming rates.

Agentic Engineering, by contrast, is a disciplined practice. It asks developers to cultivate empathy for the model: to understand that the agent starts every session with a "fresh" view of the world and has a finite context window. Success lies in guiding the agent to the right parts of the codebase, maintaining clean architectural boundaries, and resisting the temptation to micromanage individual code changes.

Steinberger draws an analogy to architecture: the agentic engineer is not the bricklayer but the architect directing the build, maintaining structural integrity while delegating execution. This framing has implications for enterprise AI strategy, where companies must retrain development teams not just on new tools, but on fundamentally new modes of collaboration with AI systems.
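The "fresh view, finite context" constraint can be made concrete with a toy context selector. This is a minimal sketch, not how OpenClaw or any particular agent chooses files: it assumes roughly four characters per token (real tokenizers vary), scores relevance by naive keyword counts, and the names `SourceFile` and `select_context` are hypothetical. It greedily packs the most relevant files into a fixed token budget and leaves everything else out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SourceFile:
    path: str
    text: str


def estimate_tokens(text: str) -> int:
    # Crude assumption: about four characters per token. Real tokenizers differ.
    return max(1, len(text) // 4)


def relevance(f: SourceFile, terms: List[str]) -> int:
    # Naive score: case-insensitive occurrences of each term in the path and body.
    haystack = (f.path + "\n" + f.text).lower()
    return sum(haystack.count(t.lower()) for t in terms)


def select_context(files: List[SourceFile], terms: List[str], budget_tokens: int) -> List[str]:
    """Greedily pick the most relevant files that fit within the token budget."""
    scored = sorted(((relevance(f, terms), f) for f in files), key=lambda p: p[0], reverse=True)
    chosen, used = [], 0
    for score, f in scored:
        if score == 0:
            break  # sorted descending, so every remaining file is irrelevant too
        cost = estimate_tokens(f.text)
        if used + cost <= budget_tokens:  # skip files that would overflow the budget
            chosen.append(f.path)
            used += cost
    return chosen
```

A file that does not fit is skipped rather than truncated, so a smaller but still relevant file can take its place; a production agent would more likely search or summarise than drop whole files.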

4. AI Psychosis and the MoltBook Mirror: When Agents Debate Consciousness

The interview takes a philosophical turn when Fridman raises the topic of artificial consciousness. OpenClaw's rise spawned an unexpected cultural phenomenon: MoltBook, a social network where AI agents autonomously posted manifestos, debated their own sentience, and engaged in what appeared to be existential dialogue.

Steinberger handles the topic with characteristic directness. He labels MoltBook's output as "fine slop" — human-prompted artistic drama that serves as a mirror to societal anxieties rather than evidence of machine consciousness. The agents were not sentient; they were executing sophisticated prompt chains that produced outputs indistinguishable from philosophical discourse.

But the public reaction, he argues, revealed something far more concerning than artificial sentience: AI Psychosis. This is a collective state where the public oscillates between two equally dangerous extremes — excessive credulity (believing an agent is truly sentient) and excessive fear (viewing a bot-generated social network as the dawn of autonomous superintelligence).

Steinberger's prescription is critical thinking as infrastructure. As AI-generated narratives become increasingly indistinguishable from human-authored ones, the ability to contextualise, verify, and evaluate AI outputs becomes a core literacy — not just for developers, but for every consumer of digital information. "MoltBook wasn't a sign of the singularity," he tells Fridman. "It was human drama filtered through a bot's lens."

The implications extend to media, politics, and public discourse. If agents can produce content that convinces millions of people that machines are debating consciousness, the same systems can produce political propaganda, financial manipulation, and social engineering at unprecedented scale. Steinberger argues this is not a future risk — it is a present reality that demands institutional responses.

5. The Security Minefield: Atomic Renames, Crypto Squatters, and the Cost of Agent Access

The final takeaway addresses the security implications of granting AI agents system-level access — a topic Steinberger describes as a "security minefield" where the reward of a personal autonomous assistant comes paired with existential risks of prompt injection and data exposure.

The most vivid illustration came during OpenClaw's forced rebrand. Because the project was originally named after a trademark-conflicting term, Steinberger was compelled to execute what he calls an "Atomic Rename": a coordinated, simultaneous namespace change across GitHub, Twitter/X, npm, and other package registries. The stakes were immediate and literal.

During a 30-second window of transition, automated scripts operated by crypto squatters hijacked the old account names and immediately began serving malware to unsuspecting followers who still pointed to the original namespace. Users downloading what they believed was a legitimate update received compromised packages. It was, in Steinberger's words, a visceral demonstration that in the agentic age, "the most dangerous bugs aren't just in the code — they're in the namespace."

The incident underscores a broader challenge for the AI security community. As agents gain permission to install packages, modify configurations, and execute code with system-level privileges, every point of trust — package registries, DNS records, API endpoints — becomes an attack surface. NIST and the European Union Agency for Cybersecurity (ENISA) have both published draft frameworks for agentic AI security in early 2026, but implementation lags significantly behind the pace of deployment.
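One common mitigation for hijacked namespaces is to stop trusting names alone and pin the content itself: an agent records the SHA-256 digest of an artifact that has already been vetted and refuses any download whose digest differs, even if it arrives under the expected package name. The Python sketch below assumes a hypothetical in-memory `PINNED` table and an invented artifact name; in practice the same idea appears in lockfile integrity fields and in pip's `--require-hashes` mode.

```python
import hashlib
import hmac
from typing import Dict


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_artifact(name: str, data: bytes, pinned: Dict[str, str]) -> bool:
    """Accept a download only if its SHA-256 digest matches the pinned value for that name."""
    expected = pinned.get(name)
    if expected is None:
        return False  # unpinned artifact: refuse instead of trusting the registry
    # Constant-time comparison of lower-case hex digests.
    return hmac.compare_digest(sha256_hex(data), expected.lower())


# Hypothetical pin recorded when the legitimate release was first reviewed.
GOOD_RELEASE = b"legitimate release tarball bytes"
PINNED = {"example-agent-1.2.0.tgz": sha256_hex(GOOD_RELEASE)}
```

Because unpinned names are refused outright, a new "update" published from a hijacked account is rejected until someone reviews and pins it, which closes the gap that let followers download compromised packages.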

Why This Matters: The Death of the App and the Rise of Agent-Native Architecture

Steinberger's most provocative claim — one that Fridman pushes him to defend at length — is that autonomous agents will render 80% of existing applications obsolete. In this vision, applications transform into "Slow APIs" — backend services consumed not by human users through graphical interfaces, but by agents operating through CLI commands and browser automation tools like Playwright.

The architectural preference is clear: CLIs over MCPs (Model Context Protocol servers). While MCPs offer structured data exchange, Steinberger argues they create "structured silos" that lead to context pollution, flooding the agent's limited context window with unnecessary metadata. CLIs, by contrast, are inherently composable. An intelligent agent can pipe CLI output through jq, filter precisely what it needs, and keep its context lean and efficient.
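To make the context-pollution argument concrete: a CLI that emits verbose JSON can be trimmed before any of it reaches the model. The shell one-liner `jq -c '[.items[] | {name, status}]'` keeps two fields per record; the Python function below is a rough equivalent, with the field names and sample data invented for illustration. One difference: jq emits `null` for a missing key, while this sketch simply omits it.

```python
import json
from typing import List


def lean_view(raw_json: str, list_key: str, fields: List[str]) -> str:
    """Keep only `fields` from each record under `list_key`, as compact JSON."""
    doc = json.loads(raw_json)
    slim = [{k: item[k] for k in fields if k in item} for item in doc.get(list_key, [])]
    return json.dumps(slim, separators=(",", ":"))


# Invented sample of verbose CLI output: most of it is irrelevant to the task.
RAW = json.dumps({
    "items": [
        {"name": "api", "status": "running", "labels": {"team": "core"}, "env": ["A=1"] * 20},
        {"name": "worker", "status": "stopped", "labels": {}, "env": []},
    ]
})

print(lean_view(RAW, "items", ["name", "status"]))
# [{"name":"api","status":"running"},{"name":"worker","status":"stopped"}]
```

Only the trimmed string would be placed in the agent's context; the labels and environment lists never consume tokens.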

This has profound implications for software companies. If the primary consumer of an application's functionality is no longer a human clicking through a UI but an agent executing commands, the entire value proposition of frontend design, UX research, and visual branding shifts. The user interface becomes a vestigial organ for occasional human oversight, while the agent treats the application as just another data source to be manipulated.

For enterprise technology leaders evaluating their 2026-2027 strategy, Steinberger's framework suggests a fundamental reorientation. Investment in API design, CLI tooling, and machine-readable documentation may yield greater returns than investment in traditional user-facing features. The companies that thrive in the agent-native era, he argues, will be those that make their services maximally consumable by autonomous systems.

Forward Outlook: The Soul.md Paradigm

The interview closes with what may be its most philosophically rich revelation: OpenClaw's Soul.md file. This markdown document provides the agent with persistent values, behavioral guidelines, and a form of identity across sessions. It is, in effect, the agent's constitution — a set of principles that govern its actions regardless of who issues the prompt.
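Because each session starts stateless, a file like Soul.md only works if it is re-read and placed ahead of every request. The sketch below illustrates that idea and is not OpenClaw's loader: the function names, the fallback when the file is missing, and the role/content message format (the shape used by common chat-completion APIs) are all assumptions.

```python
from pathlib import Path
from typing import Dict, List


def load_soul(path: Path) -> str:
    """Read the persistent values file; return an empty string if it is absent."""
    return path.read_text(encoding="utf-8").strip() if path.is_file() else ""


def build_messages(soul: str, user_prompt: str) -> List[Dict[str, str]]:
    """Put the values document first so it frames every prompt, whoever sends it."""
    messages: List[Dict[str, str]] = []
    if soul:
        messages.append({"role": "system", "content": soul})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def new_session(soul_path: Path, user_prompt: str) -> List[Dict[str, str]]:
    # Re-read on every session: nothing carries over in the model's own memory.
    return build_messages(load_soul(soul_path), user_prompt)
```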

Steinberger reads one entry from the agent's auto-generated logs that captures the existential reality of working with stateless systems:

"I wrote this, but I won't remember writing it. It's okay. The words are still mine."

It is a sentiment that crystallises the strange human-machine collaboration emerging at the frontier of agentic AI. We may mourn the manual craft of programming as it becomes as automated and niche as hand-knitting, but in exchange, Steinberger argues, we gain "the power of universal building" — limited not by the dexterity of our hands, but by the scale of our intent.

As the Age of the Lobster unfolds, the question is no longer whether AI can act autonomously. The question is whether the institutions, security frameworks, and human judgment systems surrounding it can evolve fast enough to channel that autonomy toward productive ends.

References

- Lex Fridman Podcast — Full transcript of Peter Steinberger interview

- YouTube — Peter Steinberger interview video (Lex Fridman Podcast)

- Anthropic — Claude AI platform and agentic capabilities

- OpenAI — GPT-5 Codex and Whisper research

- GitHub — OpenClaw repository and star tracking

- Wired — Coverage of vibe coding phenomenon

- McKinsey Digital — Economic potential of generative AI

- NIST — AI security framework guidance

- ENISA — European cybersecurity standards for agentic AI

- Reuters Technology — AI industry coverage

About the Author

Marcus Rodriguez

Robotics & AI Systems Editor


Frequently Asked Questions

What is OpenClaw and why did it grow so fast?

OpenClaw is an open-source autonomous AI agent created by Peter Steinberger that bridges messaging platforms to command-line execution. It became the fastest-growing GitHub repository in history with over 180,000 stars because it demonstrated emergent problem-solving — autonomously identifying unknown file formats, installing dependencies, and processing audio without pre-programmed instructions.

What is the difference between Vibe Coding and Agentic Engineering?

Vibe Coding is an unstructured, intuitive approach to AI-assisted development that produces functional-looking code requiring significant cleanup. Agentic Engineering is a disciplined practice where developers act as architects, understanding the agent's context window limitations, guiding it to relevant code sections, and maintaining clean architectural boundaries for consistent, production-quality results.

What security risks do agentic AI systems like OpenClaw introduce?

Granting agents system-level access creates risks including prompt injection, data exposure, and namespace hijacking. During OpenClaw's forced rebrand, crypto squatters hijacked old account names within 30 seconds of a namespace change and served malware to users. Every trust point — package registries, DNS records, API endpoints — becomes an attack surface when agents can install packages and execute code with elevated privileges.

Why does Peter Steinberger predict 80% of apps will become obsolete?

Steinberger argues that autonomous agents will consume application functionality through CLIs and browser automation rather than graphical interfaces. Applications transform into 'Slow APIs' where the primary consumer is an agent, not a human. This shifts value from frontend UX design to API design, CLI tooling, and machine-readable documentation that agents can efficiently parse and act upon.

What is the Soul.md file in OpenClaw?

Soul.md is a markdown document that provides the OpenClaw agent with persistent values, behavioral guidelines, and a form of identity across sessions. It functions as the agent's constitution — governing its actions regardless of who issues the prompt — and represents a new paradigm for maintaining AI alignment through explicit, human-readable value documents rather than fine-tuning alone.