AI Film Making Market Trends: Generative Tools Reshape Production Timelines and Budgets

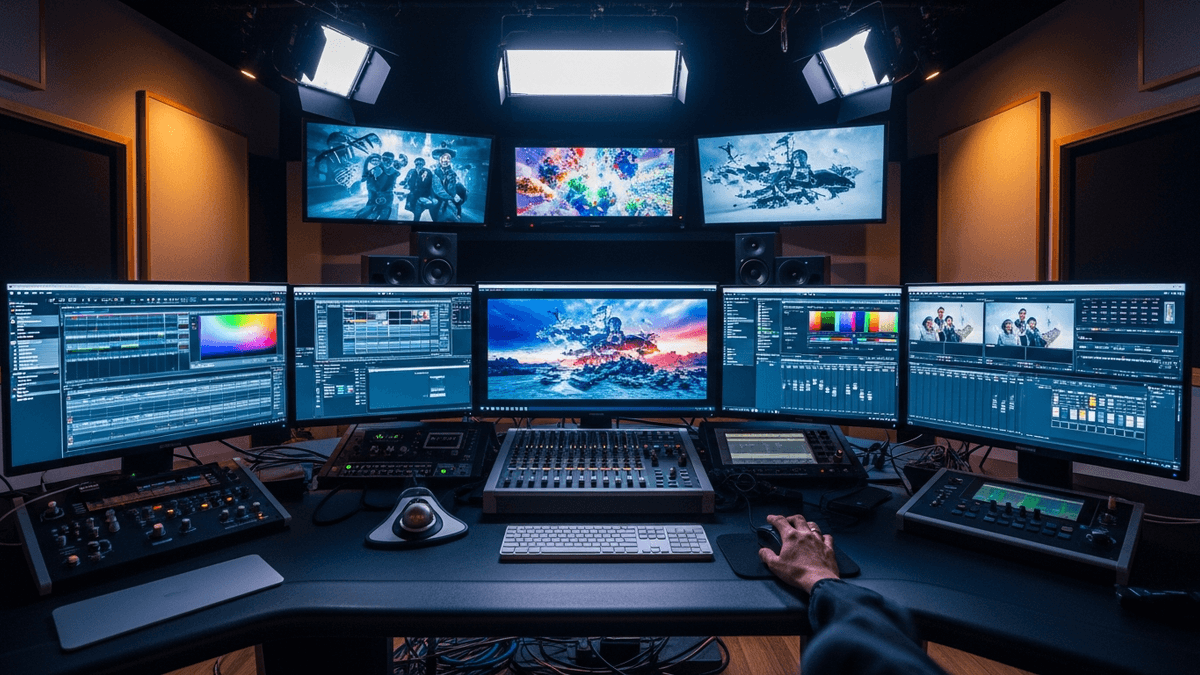

From text-to-video models to AI-native post-production, filmmaking is entering a software-first era. As capital piles in and workflows reorganize, studios are balancing speed gains with rights, ethics, and new labor rules.

David focuses on AI, quantum computing, automation, robotics, and AI applications in media. Expert in next-generation computing technologies.

The New Studio Stack: Text-to-Video Goes from Demo to Daily Tool

Generative AI is moving from eye-popping demos to practical production gear. Text-to-video systems such as OpenAI Sora and Runway Gen-3 are now being tested for previsualization, animatics, and concept teasers, compressing tasks that once took weeks into days or hours. Early adopters report faster iteration loops on story beats, camera moves, and look development, using AI clips as scaffolding rather than final frames.

The shift isn’t just about speed; it’s about optionality. Directors can explore multiple styles—surrealist, photoreal, or stylized CG—within the same production window, guiding AI outputs with reference stills, motion prompts, and storyboard beats. While most AI-generated shots still require cleanup, the creative bandwidth unlocked at the earliest stages of production is reshaping how teams plan shoots, allocate budgets, and pitch concepts.

Market Trends and Capital Flows

The commercial tailwinds are growing. According to industry reports, the AI in media and entertainment segment is expected to approach $100 billion by 2031 as content owners and toolmakers push beyond point solutions toward end-to-end pipelines. That momentum is fueled by breakthroughs in video generation, multimodal editing, and asset management that slot into existing production stacks rather than replacing them outright.

Investment and hardware bets are following. Runway raised $141 million to scale its model training and enterprise features, while Nvidia unveiled its Blackwell-generation GPUs to accelerate AI training and inference, including for video models. Companies including Adobe and Nvidia are embedding generative capabilities deeper into creative suites and collaboration layers, aiming to reduce render times, simplify asset interchange, and keep metadata and rights intact through the pipeline.

From Previs to Post: How Workflows Are Changing

In post-production, AI is becoming a co-editor rather than a replacement. Adobe is integrating generative video and object removal directly into Premiere Pro, letting editors prompt for scene extensions, plate cleanups, or B-roll variations without leaving the timeline—capabilities that were previewed with third-party model support, according to The Verge. These features don’t eliminate VFX, but they reduce the number of handoffs for routine tasks, tightening feedback loops among editors, VFX artists, and producers.

On set and in virtual production, real-time engines are the connective tissue. Epic Games is expanding its MetaHuman and Unreal Engine toolchain for facial capture, lighting, and camera tracking, which pairs neatly with AI-assisted mocap, dubbing, and texture generation. Meanwhile, Nvidia Omniverse is evolving into a hub for asset interoperability built on Universal Scene Description (USD), so AI-generated elements can be versioned, simulated, and lit alongside practical and CG plates without losing their lineage. Startups such as Runway increasingly expose hooks into these ecosystems, making it easier for studios to treat AI shots as first-class assets rather than experiments.

Risks, Rights, and Regulation

Policy is catching up to practice. Guild contracts and studio policies now codify where AI can and cannot be used in writing and production, with the Writers Guild of America establishing baseline protections around authorship and approval in 2023, as documented by the WGA. The emerging rule of thumb: AI may assist, but humans remain the credited authors and decision-makers.

Copyright and data provenance remain the sector’s thorniest issues. Companies including Adobe are pushing standards for content credentials and provenance tags to track how assets are created, edited, and published—critical for licensing and downstream monetization. On the infrastructure side, the energy cost of training and running video models is in focus; next-gen hardware from Nvidia promises better performance per watt, but studios are still weighing cloud versus on-prem trade-offs as workloads spike.

About the Author

David Kim

AI & Quantum Computing Editor


Frequently Asked Questions

What is the projected market size for AI Film Making tools and services?

Analysts expect the broader AI in media and entertainment market, of which filmmaking tools are one part, to approach $100 billion by 2031, reflecting rapid adoption across pre-production, virtual production, and post. The expansion spans both standalone tools and embedded features inside established creative suites, according to industry research.

Which platforms are leading in AI-driven video generation and editing?

Text-to-video systems from [OpenAI](https://openai.com/sora) and [Runway](https://runwayml.com) are setting the pace for concept and previs work. In post, [Adobe](https://www.adobe.com) is weaving generative features into Premiere Pro, while real-time production relies heavily on [Epic Games](https://www.unrealengine.com) Unreal Engine and [Nvidia](https://www.nvidia.com/en-us/omniverse/) Omniverse for asset interoperability.

How are AI tools changing production timelines and budgets?

They shorten iteration cycles on storyboards, animatics, and plate cleanups, allowing teams to test more ideas before committing to costly shoots. While AI won’t eliminate VFX or editorial labor, it reduces routine handoffs and helps producers reallocate time and budget to higher-impact creative work.

What are the main risks and governance issues with AI in filmmaking?

Authorship, consent, and copyright are top concerns. Guild agreements now establish boundaries for AI usage in writing and production, and studios are adopting provenance standards to track asset history, even as legal frameworks and best practices continue to evolve.

What should studios watch in the next 12 months?

Expect deeper integration of generative features into editing suites and real-time engines, along with faster hardware cycles that cut inference costs. Adoption will likely accelerate where AI tools plug cleanly into existing pipelines and preserve metadata, licensing, and creative control.