AI Film Making Outlook 2026: Industry Signals and Vendor Advances

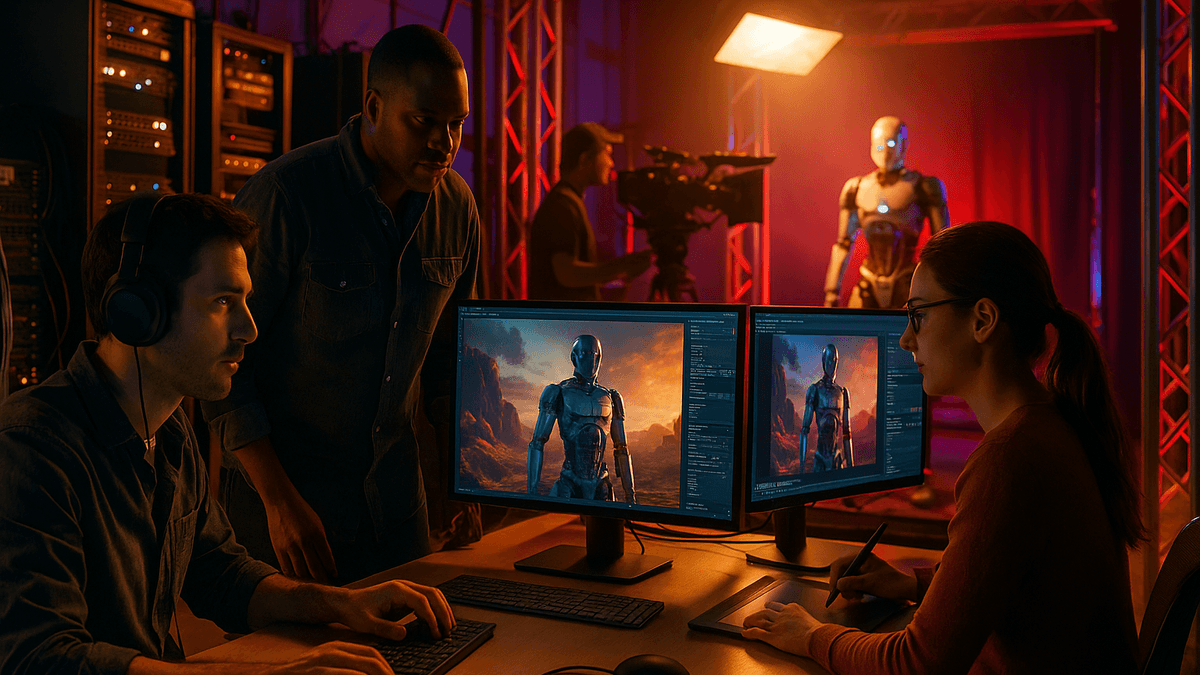

AI-led video creation is moving from pilot projects into core pipelines as studios and enterprises standardize on multimodal tools and cloud infrastructure. Leading vendors are refining rights management, safety controls, and workflow integrations, while CIOs assess governance and cost models for production-grade deployments.


LONDON — February 9, 2026 — AI-driven film and video production is shifting from experimentation to structured deployment, as enterprise buyers and studios evaluate multimodal tools from providers including Adobe, NVIDIA, OpenAI, and Runway for storyboarding, pre-visualization, and post-production workflows, according to current industry briefings and vendor disclosures that emphasize quality, rights management, and integration with existing pipelines.

Executive Summary

- Enterprises prioritize rights-cleared models, indemnification, and auditability as key procurement criteria for AI video tools, per policy statements from Adobe and licensing frameworks from Shutterstock.

- Multimodal models and cloud GPUs from NVIDIA, accessed via AWS and Microsoft Azure, underpin scalable rendering, fine-tuning, and serving for AI film making.

- Studios and brands test text-to-video and AI editing alongside established tools like DaVinci Resolve and Autodesk Flame, focusing on interoperability and version control.

- Governance frameworks integrate generative disclosures, provenance (e.g., C2PA), and model risk management aligned to guidance summarized by Gartner.
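The provenance idea behind content credentials such as C2PA can be shown with a deliberately simplified sketch. It assumes Python and only its standard library. A SHA-256 digest binds a record to the exact asset bytes, and the record is then signed, so a change to either the asset or its AI-generation disclosure becomes detectable. The `ProvenanceRecord` fields, the generator name, and the shared HMAC key are hypothetical. The actual C2PA specification uses signed manifests with certificate-based signatures, and this sketch does not implement that format.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass, field, replace
from typing import List, Tuple

# Hypothetical shared secret for illustration only. Real content credentials
# (e.g., C2PA) rely on certificate-based signatures, not a shared HMAC key.
SIGNING_KEY = b"demo-key-not-for-production"


@dataclass
class ProvenanceRecord:
    """Simplified provenance record; this is NOT the C2PA manifest schema."""
    asset_sha256: str   # hard binding to the exact asset bytes
    generator: str      # tool or model that produced the asset (hypothetical label)
    ai_generated: bool  # generative disclosure flag
    edits: List[str] = field(default_factory=list)  # human-readable edit history


def _canonical(record: ProvenanceRecord) -> bytes:
    # Stable serialization so an identical record always yields the same signature.
    return json.dumps(asdict(record), sort_keys=True).encode("utf-8")


def sign(record: ProvenanceRecord) -> str:
    return hmac.new(SIGNING_KEY, _canonical(record), hashlib.sha256).hexdigest()


def create_record(asset: bytes, generator: str, ai_generated: bool) -> Tuple[ProvenanceRecord, str]:
    record = ProvenanceRecord(hashlib.sha256(asset).hexdigest(), generator, ai_generated)
    return record, sign(record)


def verify(asset: bytes, record: ProvenanceRecord, signature: str) -> bool:
    # The record must be unaltered (signature check) AND must match the asset bytes.
    record_ok = hmac.compare_digest(sign(record), signature)
    asset_ok = hashlib.sha256(asset).hexdigest() == record.asset_sha256
    return record_ok and asset_ok


if __name__ == "__main__":
    frame = b"rendered frame bytes"
    record, sig = create_record(frame, "hypothetical-video-model", ai_generated=True)
    print(verify(frame, record, sig))                               # True
    print(verify(frame + b"x", record, sig))                        # False: asset changed
    print(verify(frame, replace(record, ai_generated=False), sig))  # False: disclosure stripped
```

Because the disclosure flag sits inside the signed record, removing the "AI-generated" label breaks verification just as editing the pixels does. That coupling is the property provenance frameworks rely on for generative labeling.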

Key Takeaways

- AI-native pre-visualization and automated post-production workflows are moving into production pilots across creative industries, with cloud economics and rights policies shaping vendor selection, per enterprise briefings from Microsoft and Amazon.

- Best-in-class deployments favor platform-neutral stacks that connect model providers such as OpenAI and Google with established editorial and VFX suites from Adobe and Autodesk.

- Risk management concentrates on training data provenance and generative labeling, aligning with industry initiatives like C2PA and enterprise policy playbooks from Forrester.

- Studios emphasize talent augmentation, not replacement, with AI assistants for rotoscoping, rough cuts, and asset generation, as described in analyst outlooks from IDC.

| Trend | Enterprise Priority | Implementation Window | Representative Vendors |
|---|---|---|---|
| Text-to-Video Diffusion | High | Near-term pilots | Runway, OpenAI, Google |
| Generative Editing in NLEs | High | Near-term | Adobe, Blackmagic |
| 3D Asset & Scene Generation | Medium | Mid-term | NVIDIA Omniverse, Autodesk |
| Digital Humans & Avatars | Medium | Mid-term | Synthesia, NVIDIA ACE |
| Provenance & Watermarking | High | Near-term | C2PA, Adobe |
| Cloud-Scale Rendering & Inference | High | Near-term | AWS Deadline, Azure Media |

Analysis: Implementation, Governance, and ROI

Based on hands-on evaluations by enterprise technology teams and integrators cited by Gartner, the most durable deployments use layered safeguards: rights-cleared training sources (as championed by Adobe Firefly), content credentials via C2PA, and change management embedded in creative review cycles. According to Forrester, integrating AI assistants into existing NLE timelines enables near-term time savings on rotoscoping, rough cuts, and localization.

"Generative AI has become a practical part of content creation workflows, and acceleration is coming from advances in both models and systems," said Jensen Huang, CEO of NVIDIA, in prepared remarks tied to industry keynotes and January 2026 briefings. According to NVIDIA press materials, the company emphasizes end-to-end pipelines that connect model development to real-time engines via Omniverse and its inference stacks.

"Our focus is on commercially safe, rights-aware generation and tight integration with professional tools," said Scott Belsky, Chief Strategy Officer and EVP of Design at Adobe, per company commentary highlighted in corporate announcements and media briefings. Adobe's content credential resources state that enterprise policies now require provenance and disclosure for generative assets.

"Enterprises are transitioning from isolated pilots to cross-team deployments, emphasizing governance and measurable outcomes," noted Avivah Litan, Distinguished VP Analyst at Gartner, in research commentary discussing January 2026 adoption patterns. Gartner's guidance underscores the need to match model fit to creative objectives while maintaining consistent approval workflows for broadcast and theatrical content.

Company Positions and Differentiators

Text-to-video and generative editing providers such as Runway, Pika, and OpenAI focus on controllability, camera motion, and style transfer while investing in enterprise security and audit tooling. Platform players like Google and Microsoft integrate model access with content management, identity, and rights policies at scale, as documented in product literature and analyst notes.

Creative software suites from Adobe, Autodesk, and Blackmagic Design emphasize non-destructive workflows and human-in-the-loop editing, maintaining interoperability with asset management systems and collaboration tools. Cloud providers AWS and Azure offer reference architectures for rendering, inference, and storage that fit the procurement, security, and cost-allocation structures of large studios and brands, in line with industry best practices.

Competitive Landscape

| Company | Core Capability | Target Users | 2026 Focus |
|---|---|---|---|
| Adobe | Generative editing, credentials | Studios, agencies | Rights-aware workflows & NLE integration |
| NVIDIA | GPUs, Omniverse, avatars | Model builders, studios | End-to-end pipelines & real-time graphics |
| Runway | Text-to-video | Creators, teams | Controllability & enterprise endpoints |
| Pika | Video generation | Creators | Camera motion & style tools |
| Autodesk | VFX and finishing | Post houses | Pipeline fit with AI assets |
| Blackmagic | NLE/color with AI | Editors, colorists | Timeline-aware assistants |
| OpenAI | Multimodal models | Enterprises | Text-to-video research & APIs |
| AWS / Azure | Cloud inference & render | Studios | Reference architectures & cost ops |

- January 2026 — Industry briefings from NVIDIA and Adobe emphasize rights-aware workflows and end-to-end pipelines in media production, per company newsroom updates.

- January 2026 — Cloud reference architectures for media rendering and AI inference highlighted by AWS and Microsoft Azure, aligning with enterprise procurement and governance.

- January 2026 — Analyst outlooks from Gartner and Forrester underscore provenance, security, and integration as primary adoption drivers for AI video tools.

Disclosure: BUSINESS 2.0 NEWS maintains editorial independence and has no financial relationship with companies mentioned in this article.

Sources include company disclosures, regulatory filings, analyst reports, and industry briefings.


About the Author

Marcus Rodriguez

Robotics & AI Systems Editor

Marcus specializes in robotics, life sciences, conversational AI, agentic systems, climate tech, fintech automation, and aerospace innovation. Expert in AI systems and automation.

Frequently Asked Questions

What defines AI Film Making in 2026 for enterprise buyers?

AI Film Making in 2026 centers on multimodal models and workflow integrations that augment pre-visualization, editing, and finishing. Enterprises emphasize rights-aware models and provenance, using content credentials from initiatives like C2PA and tools from Adobe, Autodesk, and Blackmagic Design. Deployments increasingly run on NVIDIA GPUs via AWS and Azure, with model endpoints from OpenAI and Google. The focus is on governance, interoperability with NLEs, and measurable time savings without compromising creative control or compliance.

Which vendors are most relevant for production-grade deployments?

Creative suites from Adobe, Autodesk, and Blackmagic remain core for editing and finishing, while text-to-video and generative editing from Runway, Pika, and OpenAI drive experimentation. NVIDIA provides the hardware and software backbone for training and inference, accessed through AWS and Microsoft Azure. Google contributes multimodal research and APIs, and Synthesia offers avatar-based workflows for localization. Selection often hinges on content credentials, security attestations, and integration with asset management systems.

How are enterprises integrating AI tools into existing pipelines?

Organizations are embedding generative features directly into NLE timelines and asset managers, enabling timeline-aware edits and automated tasks like rotoscoping or rough cuts. Cloud reference architectures from AWS and Azure standardize GPU access, storage, and orchestration, while audit trails and content credentials maintain compliance. Teams adopt human-in-the-loop review cycles, pairing Runway or OpenAI generation with Adobe Premiere Pro or DaVinci Resolve for final polish, thus preserving creative sign-off and version control.

What are the main risks and how are they mitigated?

Key risks include rights concerns around training data, provenance of outputs, and model hallucinations that degrade production quality. Mitigations focus on rights-cleared model sources (e.g., Adobe Firefly’s approach), embedded content credentials via C2PA, and deployment on secure, compliant cloud infrastructure. Governance frameworks set approval gates and watermarking policies, while vendors supply indemnification and audit logs. Analysts emphasize aligning model choice to intended use, especially for broadcast or theatrical releases.
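The approval gates and labeling policies described above can be sketched as a simple pre-release check. This is a hypothetical Python example and not any vendor's governance API. The `Asset` fields, the `Release` targets, and the sign-off counts in `REQUIRED_APPROVALS` are illustrative assumptions that a real policy would define for itself. The sketch applies stricter rules for broadcast and theatrical releases and records each decision in an audit log.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Release(Enum):
    SOCIAL = "social"
    BROADCAST = "broadcast"
    THEATRICAL = "theatrical"


@dataclass
class Asset:
    name: str
    ai_generated: bool
    has_content_credentials: bool  # provenance attached, e.g. a C2PA manifest
    disclosure_label: bool         # generative disclosure applied
    watermarked: bool
    rights_cleared_model: bool     # produced by a model with rights-cleared sources
    human_approvals: int           # recorded human sign-offs


# Hypothetical policy: broadcast and theatrical releases need more human sign-offs.
REQUIRED_APPROVALS = {Release.SOCIAL: 1, Release.BROADCAST: 2, Release.THEATRICAL: 2}


def gate(asset: Asset, target: Release, audit_log: List[str]) -> List[str]:
    """Return blocking issues (empty list means the asset may ship) and log the decision."""
    issues: List[str] = []
    if asset.ai_generated:
        if not asset.has_content_credentials:
            issues.append("missing content credentials")
        if not asset.disclosure_label:
            issues.append("missing generative disclosure")
        if not asset.watermarked:
            issues.append("missing watermark")
        # Stricter rule for broadcast/theatrical: require a rights-cleared model.
        if target is not Release.SOCIAL and not asset.rights_cleared_model:
            issues.append("model not rights-cleared for this release")
    needed = REQUIRED_APPROVALS[target]
    if asset.human_approvals < needed:
        issues.append(f"needs {needed} human approvals, has {asset.human_approvals}")
    decision = "APPROVED" if not issues else "BLOCKED: " + "; ".join(issues)
    audit_log.append(f"{asset.name} -> {target.value}: {decision}")
    return issues


if __name__ == "__main__":
    log: List[str] = []
    shot = Asset("shot_042_bg_plate", True, True, True, True, True, human_approvals=1)
    print(gate(shot, Release.SOCIAL, log))     # []
    print(gate(shot, Release.BROADCAST, log))  # ['needs 2 human approvals, has 1']
```

Returning the full list of blocking issues, rather than a single pass/fail flag, lets reviewers see every unmet requirement at once. That fits the human-in-the-loop review cycles the analysts describe.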

What developments should executives watch through 2026?

Executives should track improvements in spatial-temporal coherence for text-to-video, advances in timeline-aware editability, and tighter coupling of content credentials with asset managers. They should also monitor cloud economics across GPU generations and reference architectures from AWS and Azure. Analyst outlooks from Gartner and Forrester point to governance and integration as decisive factors for scale, while vendor roadmaps from Adobe, NVIDIA, and OpenAI highlight end-to-end pipelines and rights-aware workflows as enterprise priorities.