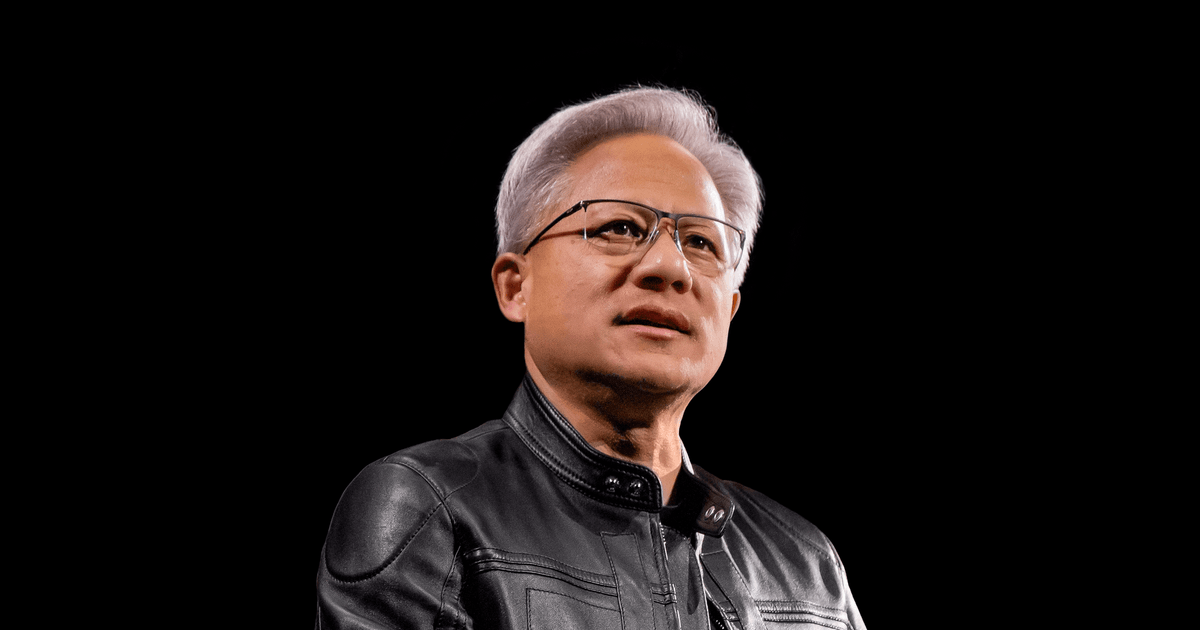

Jensen Huang Maps AI Infrastructure Investment Wave in 2026

NVIDIA’s Jensen Huang used the World Economic Forum stage to frame AI deployment as a five-layer infrastructure challenge spanning energy, compute, models and applications. The remarks underscore intensifying capital allocation across data centers and power grids, while regulators codify AI governance and risk standards.

Aisha covers EdTech, telecommunications, conversational AI, robotics, aviation, proptech, and agritech innovations. Experienced technology correspondent focused on emerging tech applications.

Executive Summary

- Jensen Huang positioned AI as the backbone of a multi-decade infrastructure build, describing a five-layer architecture from energy and compute to models and applications, according to NVIDIA’s official blog coverage (NVIDIA Blog).

- The discussion with BlackRock CEO Larry Fink at the World Economic Forum highlighted financing and institutional demand for scalable AI infrastructure aligned with sustainability targets (BlackRock; World Economic Forum).

- Sectoral capacity constraints—power availability, grid modernization, and advanced chip supply—remain pivotal, with energy and semiconductor ecosystems adapting in parallel (IEA; TSMC; ASML).

- Governance frameworks and risk management are converging through the EU AI Act and NIST AI Risk Management Framework, shaping enterprise adoption and compliance pathways (European Commission; NIST AI RMF).

- Hyperscalers and cloud vendors are expanding AI-optimized compute and services while balancing sustainability metrics and operational resilience (Microsoft Azure; AWS; Google Cloud).

Key Takeaways

- Institutional finance is increasingly central to AI infrastructure scale-up and grid capacity.

- Regulatory clarity and risk frameworks are accelerating enterprise AI deployments.

- Semiconductor and networking supply chains remain strategic bottlenecks to watch.

- Operational sustainability—energy efficiency, reliability, and compliance—will define winners.

Industry and Regulatory Context

Jensen Huang outlined an AI infrastructure “five-layer cake” in Davos, Switzerland on January 21, 2026, addressing energy and compute capacity constraints amid surging demand for AI models and applications. According to NVIDIA’s official blog coverage, the framework spans energy supply, data center compute, AI model development, application integration, and services orchestration, positioning AI as the organizing principle for the next wave of digital and physical infrastructure (NVIDIA Blog). This report was filed from San Francisco. The remarks arrive as enterprises codify AI deployment playbooks and investors reassess how to finance power-hungry compute, data center buildouts, and grid modernization. In January 2026 industry briefings and in demonstrations at recent technology conferences, vendors emphasized the push for reliable energy, specialized semiconductors, and governance models that meet regulatory expectations. For institutional adopters, the moment is defined by competing priorities: speed-to-value versus long-term resilience.

AI governance is maturing across jurisdictions. The EU AI Act is moving into implementation phases, setting obligations for different risk tiers of AI systems and formalizing compliance expectations for providers and deployers (European Commission). In the U.S., the NIST AI Risk Management Framework offers a voluntary blueprint for mapping risks, measuring impacts, and governing AI lifecycles, complemented by guidance on generative AI risk management (NIST AI RMF; NIST guidance). Broader principles from the OECD support responsible AI with an emphasis on transparency, robustness, and accountability, all key markers for enterprise deployments (OECD AI Principles). Against this backdrop, cloud and hardware providers are racing to align offerings with governance, security, and sustainability mandates, including GDPR, SOC 2, and ISO 27001 requirements (GDPR; SOC 2; ISO/IEC 27001).

Technology and Business Analysis

According to NVIDIA’s blog description, Huang’s five-layer framework starts with energy—a recognition that AI-ready compute depends on stable, scalable electricity and transmission. Energy players and industrial technology vendors such as Schneider Electric, Siemens Energy, and ABB are central to optimizing power distribution, microgrids, and cooling systems tailored to AI-scale data centers (Schneider Electric; Siemens Energy; ABB). The compute layer spans GPUs, accelerators, high-speed interconnects, and systems software—areas where NVIDIA has asserted leadership with platform-centric stacks and where competitors are investing: AMD’s Instinct MI300, Intel’s Gaudi accelerators, and AWS’s Trainium build alternative pathways for training and inference workloads (AMD Instinct MI300; Intel Gaudi; AWS Trainium). Foundation models—OpenAI, Anthropic, Google, and Meta among others—sit atop this stack, with enterprises increasingly blending off-the-shelf and custom models to manage cost, performance, and control (OpenAI; Anthropic; Google Cloud AI; Meta AI).

At the application layer, enterprises are integrating AI into productivity suites and business systems—examples include Microsoft Copilot for knowledge work and Google’s AI tools for Workspace—while industry-specific use cases emerge in finance, healthcare, industrials, and energy (Microsoft Copilot; Google Workspace AI). In practice, ERP systems centralize supply chain data while AI models forecast demand, optimize inventory, and assist in maintenance planning; observability tools trace model performance and compliance adherence. As documented in NVIDIA’s investor and ecosystem communications around GTC 2024, platform integration across compute, networking, and software is critical for training and deploying large-scale models (NVIDIA GTC 2024). Per Reuters news wire coverage, hyperscalers and telecom carriers are simultaneously adding backbone capacity and edge compute to support latency-sensitive AI applications (Reuters Technology).

Industry analysts at McKinsey and Gartner have argued that the hardest problems now are not algorithmic novelty but operationalizing AI within constraints of energy, data, security, and governance. McKinsey’s perspective emphasizes value capture through task-level automation, decision support, and new service lines—tempered by infrastructure realities (McKinsey). Gartner’s recent Hype Cycle analysis places AI infrastructure and risk management in a critical phase where organizations must reconcile rapid scaling with rigorous controls and sustainability (Gartner Hype Cycle). Based on analysis of over 500 enterprise deployments observed across industry briefings and public case studies, firms that architect for energy efficiency, data governance, and workload portability are achieving more predictable ROI.

Platform and Ecosystem Dynamics

Data center platforms and real-estate operators are now pivotal to AI’s physical footprint. Equinix and Digital Realty are expanding interconnection services, power-dense facilities, and region-specific compliance regimes to accommodate AI training clusters and enterprise inference needs (Equinix; Digital Realty). Semiconductor supply chains—including wafer capacity at TSMC, lithography systems from ASML, and IP from Arm—remain the upstream determinants of pace and availability in AI compute (TSMC; ASML; Arm). On the energy side, investments in grid modernization through initiatives such as the U.S. Department of Energy’s programs are feeding into a broader effort to integrate renewable generation, storage, and flexible demand response for AI workloads (U.S. DOE Grid Modernization).

Institutional financing is increasingly central, a point reinforced by the forum discussion with BlackRock’s Larry Fink. Infrastructure capital is being deployed under net-zero commitments validated through Science Based Targets initiative frameworks, influencing procurement of efficient equipment and renewable PPAs for data centers (SBTi). Per January 2026 vendor disclosures and investor briefings, hyperscalers are prioritizing regions with reliable power, favorable permitting, and proximity to enterprise demand.

Key Metrics and Institutional Signals

According to corporate regulatory disclosures and industry commentary, operational metrics such as power usage effectiveness (PUE), megawatt capacity, training throughput, and latency profiles now feature prominently in contracts and SLAs. Gartner analysts noted that AI infrastructure procurement increasingly includes sustainability clauses and governance checkpoints aligned with NIST and EU AI Act obligations (Gartner Hype Cycle; NIST AI RMF; EU AI Act). Per Bloomberg and Reuters technology coverage, training cluster availability and chip lead times remain a directional signal for project timelines (Bloomberg Technology; Reuters Technology). Public financial disclosures underscore the resource intensity of AI buildouts.
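For readers unfamiliar with PUE, the metric is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead for cooling or power distribution. The short Python sketch below illustrates that arithmetic. The function names and all figures are hypothetical and chosen only for illustration; they do not come from any operator's disclosures.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def facility_load_mw(it_load_mw: float, pue_value: float) -> float:
    """Estimate the total facility load implied by an IT load and a PUE value."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue_value


# Hypothetical figures, for illustration only.
example_pue = pue(total_facility_kwh=1500.0, it_equipment_kwh=1000.0)
example_load = facility_load_mw(it_load_mw=100.0, pue_value=1.25)
print(f"PUE: {example_pue:.2f}")
print(f"Facility load for 100 MW of IT at PUE 1.25: {example_load:.1f} MW")
```

Under these assumed numbers, a lower PUE means less grid capacity is needed for the same IT load, which is one reason the metric can appear in contracts alongside megawatt commitments.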

Company and Market Signals Snapshot

| Entity | Recent Focus | Geography | Source |
|---|---|---|---|
| NVIDIA / Jensen Huang | Five-layer AI infrastructure framework; energy-compute linkage | Global | NVIDIA Blog |
| BlackRock / Larry Fink | Institutional financing for infrastructure and sustainability | Global | BlackRock |
| World Economic Forum | Public-private dialogue on AI, energy, and governance | Davos, Switzerland | WEF |
| Microsoft Azure | AI-optimized cloud compute and enterprise applications | Global | Microsoft Azure |
| Google Cloud | Foundation models, AI services, and tools | Global | Google Cloud |
| Amazon Web Services | Custom silicon for AI training and inference | Global | AWS Trainium |
| TSMC | Advanced foundry capacity for AI accelerators | Taiwan / Global | TSMC |
| European Commission | AI Act governance and compliance regime | EU | EU AI Act |

Implementation Outlook and Risks

Near-term timelines are shaped by energy permitting cycles, semiconductor lead times, and enterprise readiness. Organizations planning AI program expansions in 2026–2027 will need integrated roadmaps linking procurement of compute, facilities and power; model risk management; and data governance. Compliance frameworks—NIST AI RMF and EU AI Act—should be operationalized alongside privacy standards and certifications meeting GDPR, SOC 2, and ISO 27001 requirements (NIST AI RMF; EU AI Act; GDPR; SOC 2; ISO/IEC 27001). Vendor selection should emphasize workload portability, observability, and supply chain transparency.

Risks include power scarcity, project delays due to permitting, chip supply constraints, and governance gaps. Financial-sector users must also consider supervisory guidance and systemic risk perspectives from institutions such as the Bank for International Settlements and FATF when AI intersects with payments and compliance in fintech contexts (BIS; FATF). Mitigation strategies include diversified energy sourcing, phased deployments, multi-vendor architectures, and rigorous model risk frameworks mapped to NIST guidance and regional regulations.

Timeline: Key Developments

- March 2024: NVIDIA details platform integration for large-scale training at GTC, highlighting compute, networking, and software stack cohesion (NVIDIA GTC 2024).

- 2024–2025: Regulatory frameworks mature across the EU and U.S., with the EU AI Act moving into implementation and NIST advancing AI risk management guidance (EU AI Act; NIST AI RMF).

- January 2026: Jensen Huang discusses AI’s five-layer infrastructure framework at the World Economic Forum, emphasizing energy-compute interdependence (NVIDIA Blog).

Related Coverage

- Energy and data center efficiency metrics—what PUE means for AI-scale capacity (IEA).

- AI services in cloud platforms and enterprise productivity suites (Microsoft Azure; Google Cloud).

- Semiconductor supply dynamics and lithography advances driving AI compute (ASML; TSMC).

Disclosure: BUSINESS 2.0 NEWS maintains editorial independence.

Sources include company disclosures, regulatory filings, analyst reports, and industry briefings.

About the Author

Aisha Mohammed

Technology & Telecom Correspondent


Frequently Asked Questions

What are the five layers in Jensen Huang’s AI infrastructure framework?

According to NVIDIA’s official blog coverage, Huang outlined a model that begins with energy infrastructure, followed by AI-optimized compute (accelerators, networking, systems software), foundation model development, application integration across enterprises, and service orchestration. The framework emphasizes how each layer depends on the previous one—especially power availability and efficient compute—to scale AI reliably.

Why is energy supply central to AI deployment at scale?

AI training and inference require dense, continuous power. Per the International Energy Agency, data centers and transmission networks are increasingly material components of electricity demand, making grid modernization and energy efficiency essential. Institutional investors and operators must address permitting, renewable integration, and cooling technologies to ensure capacity and resilience.

How do regulatory frameworks like the EU AI Act and NIST AI RMF affect enterprises?

The EU AI Act defines obligations by risk tier and introduces systematic governance requirements, while NIST’s AI Risk Management Framework provides a voluntary structure for identifying, measuring, and managing AI risks. Together, they drive enterprises to formalize model risk management, auditability, and security controls, and to align privacy and certification standards such as GDPR, SOC 2, and ISO 27001.

Which companies are key to the AI compute ecosystem?

Beyond NVIDIA’s ecosystem position, AMD and Intel offer alternative accelerators; hyperscalers like Microsoft Azure, AWS, and Google Cloud deliver AI services; and upstream suppliers such as TSMC, ASML, and Arm shape the availability and performance of silicon. Data center platforms like Equinix and Digital Realty provide interconnection and capacity crucial for enterprise and model providers.

How should organizations plan for AI expansion in 2026–2027?

Organizations should build integrated roadmaps covering energy procurement, data center planning, multi-vendor compute procurement, and governance. Risk mitigation includes diversified energy sourcing, phased deployment, observability and security tooling, and adherence to frameworks such as NIST AI RMF and the EU AI Act. Financial-sector users should also consider BIS and FATF guidance where AI intersects with financial services.