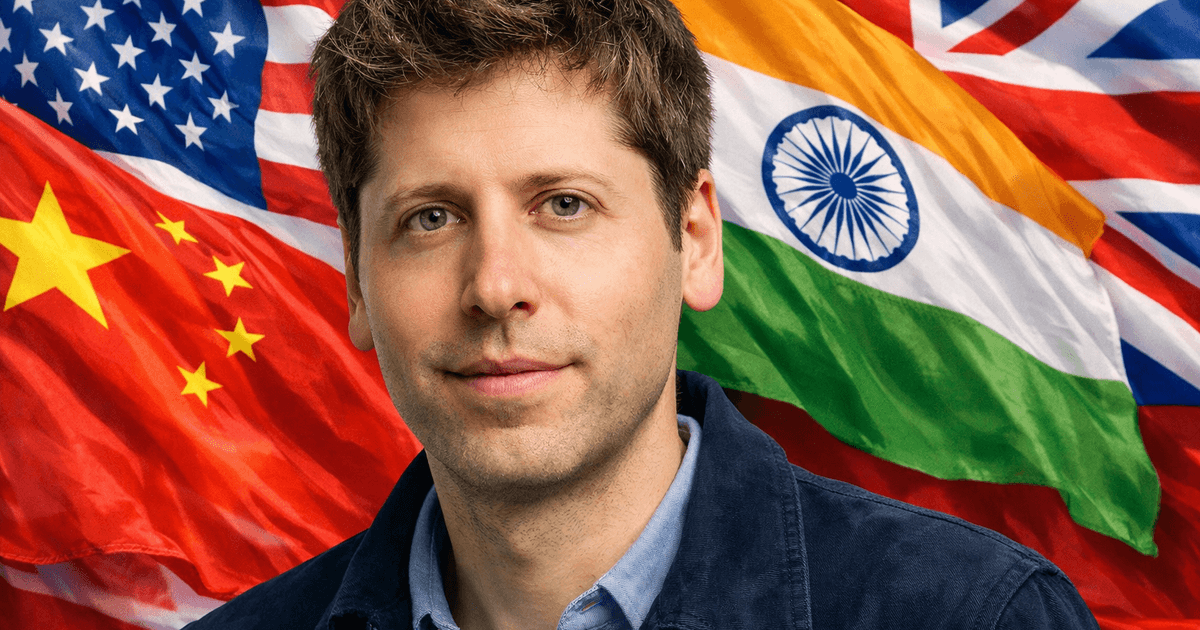

Superintelligent AI Is Possible by 2028: Sam Altman

OpenAI CEO Sam Altman declared at the India AI Impact Summit 2026 that early versions of true superintelligence could arrive as soon as the end of 2028. The prediction, backed by trillion-dollar infrastructure investments, accelerating scaling laws, and converging timelines from Anthropic, Google DeepMind, and xAI, has reignited the global debate on AI safety, governance, and the future of human civilisation.

Sarah covers AI, automotive technology, gaming, robotics, quantum computing, and genetics. Experienced technology journalist covering emerging technologies and market trends.

Executive Summary

LONDON, February 20, 2026 — In what may prove to be one of the most consequential statements made at a major technology forum this decade, OpenAI CEO Sam Altman declared at the India AI Impact Summit 2026 that early versions of true superintelligence could arrive as soon as the end of 2028 (Altman 2026). The claim — simultaneously audacious, technically grounded, and deeply unsettling to many in the scientific community — has reignited a global conversation about the pace of AI development, its societal implications, and whether humanity is remotely prepared for the transition. Whether one regards Altman as a visionary or a hyper-optimist, the forces driving his prediction are real, measurable, and accelerating. As we reported in Top 10 Neuroscience Companies by Market Cap to Watch in 2026, breakthroughs in brain-computer interfaces and AI-neuroscience convergence are already redrawing the boundaries of what machines can achieve.

Key Takeaways

- Sam Altman predicts early superintelligence by late 2028, with aggregate data centre capacity exceeding collective human cognition.

- Over $1 trillion in AI infrastructure investment has been committed globally for 2025 to 2028, creating self-reinforcing momentum across competing firms and nations.

- Anthropic CEO Dario Amodei, Google DeepMind CEO Demis Hassabis, and xAI founder Elon Musk offer converging but distinct timelines ranging from 2026 to 2035.

- Scaling laws — the empirical backbone of the industry's optimism — face potential bottlenecks in data availability and energy infrastructure.

- The alignment problem remains unsolved, and international governance frameworks are struggling to keep pace with development timelines.

The Architecture of Altman's 2028 Prediction

Altman's prediction rests on a convergence of several specific technical and infrastructural milestones that OpenAI is actively working toward. Speaking in New Delhi, he described a three-part vision: first, that early superintelligence would emerge from continued scaling of reasoning models; second, that by late 2028 the aggregate "intellectual capacity" housed within global data centres would exceed that of every human mind on Earth combined; and third, that OpenAI aims to have a "legitimate AI researcher" (a system capable of autonomously executing large-scale scientific research projects) operational well before that date (Altman 2025).

The third element is particularly significant. An AI that can autonomously conduct research is not merely a tool — it is a force multiplier for its own development. If such a system can improve its own architecture, identify training efficiencies, and run experiments without human direction, the pace of capability improvement could enter a recursive loop that makes linear projections meaningless. OpenAI researchers have described this scenario internally as the "bootstrapping threshold" — the point at which AI development partially removes itself from the human bottleneck (OpenAI Research 2025).
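Why a recursive loop undermines linear projections can be shown with a deliberately simple toy model. In the sketch below, every name and number is hypothetical and chosen only for illustration; nothing here comes from OpenAI or from any forecast. The linear path adds a fixed amount of capability each period, standing in for human-paced research, while the recursive path adds an amount proportional to current capability, standing in for a system that speeds up its own development. Both start at the same level and gain the same amount in the first period.

```python
def linear_path(start: float, step: float, periods: int) -> list[float]:
    """Capability rises by the same fixed step every period (human-paced research)."""
    return [start + step * t for t in range(periods + 1)]


def recursive_path(start: float, rate: float, periods: int) -> list[float]:
    """Each period's gain is proportional to current capability (self-accelerating research)."""
    values = [start]
    for _ in range(periods):
        values.append(values[-1] * (1 + rate))
    return values


# Hypothetical parameters: both paths gain 0.5 in the first period.
linear = linear_path(start=1.0, step=0.5, periods=10)
recursive = recursive_path(start=1.0, rate=0.5, periods=10)
for t, (a, b) in enumerate(zip(linear, recursive)):
    print(f"period {t:2d}: linear {a:5.1f}   recursive {b:6.1f}")
```

Even with identical first steps, the recursive series pulls ahead from the second period onward and the gap widens every period after that, which is why extrapolating from early progress says little once feedback sets in. Real capability has no single scalar measure, so this illustrates only the shape of the argument, not a timeline.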

"I think it's possible that we will have superintelligence — not just AGI — by 2028. These won't be the systems we have today. They will be categorically different." — Sam Altman, India AI Impact Summit 2026

"We want to deploy an AI that can essentially be a virtual co-worker — someone who can autonomously do research, write code, run experiments. That's not science fiction. That's what we're building toward right now." — Sam Altman, Wired, 2025

The data centre prediction also deserves closer scrutiny. Altman's claim that computational capacity will surpass collective human cognition by late 2028 is not a metaphor; it is a quantitative projection grounded in semiconductor roadmaps and investment announcements. As of early 2026, NVIDIA's Blackwell architecture and its successors are delivering computational throughput that doubles roughly every 18 months. With hyperscalers such as Microsoft Azure, Google Cloud, and Amazon Web Services all running multi-hundred-billion-dollar AI infrastructure buildouts, the raw compute available for AI training and inference is expanding at a pace that no previous technological era has witnessed.
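The 18-month doubling rate implies a simple compound-growth calculation. The sketch below assumes that rate holds steadily from February 2026 to December 2028 (34 months) and covers per-chip throughput only. Aggregate capacity would also scale with the number of chips deployed, which the hypothetical `growth_factor` helper does not model.

```python
def growth_factor(months: float, doubling_months: float = 18.0) -> float:
    """Return the multiplicative growth after `months`, assuming a fixed doubling period."""
    return 2.0 ** (months / doubling_months)


# Assumed horizon: February 2026 to December 2028.
start_year, start_month = 2026, 2
end_year, end_month = 2028, 12
horizon_months = (end_year - start_year) * 12 + (end_month - start_month)

per_chip_gain = growth_factor(horizon_months)
print(f"{horizon_months} months at an 18-month doubling: ~{per_chip_gain:.1f}x per-chip throughput")
```

Under these assumptions per-chip throughput grows by a factor of less than four, so most of the growth in total capacity that Altman's claim implies would have to come from deploying far more hardware, which is what the infrastructure buildouts described in the next section are paying for.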

Investment and Infrastructure: The Fuel Behind the Race

No serious analysis of the 2028 superintelligence thesis can ignore the extraordinary financial forces accelerating it. Meta CEO Mark Zuckerberg announced in early 2025 that his company would invest approximately $60 to $65 billion in AI infrastructure in that year alone, with total commitments over the following three years potentially reaching $600 billion when including ecosystem partners and supply chain investments (Meta Q1 2025 Earnings Call). Microsoft has committed over $80 billion to AI data centres for 2025, and Alphabet announced capital expenditures of over $75 billion for AI-related infrastructure in the same period. These are not R&D bets — these are civilisational wagers.

"We're going to build the infrastructure. We're going to win this. The risk is not spending too much — the risk is spending too little and watching someone else define the future." — Mark Zuckerberg, Meta Earnings Call, Q1 2025

Global AI Infrastructure Investment Commitments, 2025 to 2028

| Organisation | Investment | Period | Focus | Source |
|---|---|---|---|---|
| Meta | $60–65B (2025); ~$600B ecosystem | 2025–2028 | AI data centres, GPU clusters | Meta Q1 2025 Earnings |
| Microsoft | $80B+ | 2025 | Azure AI infrastructure | Reuters 2025 |
| Alphabet / Google | $75B+ | 2025 | AI compute, TPU clusters | Alphabet Investor Relations |
| OpenAI (Stargate) | $500B (joint venture) | 2025–2029 | Superintelligence-class training | Financial Times 2025 |
| Saudi Arabia PIF | $100B+ | 2025–2030 | National AI infrastructure | Bloomberg 2025 |
| UAE MGX | $100B+ | 2025–2030 | AI compute, sovereign AI | Reuters 2025 |
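The rows in the table cover different periods and mix direct capital expenditure with ecosystem-wide figures, so their headline numbers cannot simply be added into one total. The sketch below uses a hypothetical `Commitment` record and the lower bound of each row as transcribed from the table, and tallies single-year and multi-year figures separately. It is a rough consistency check, not an estimate: overlaps are likely (MGX, for instance, is also an equity investor in Stargate), and Meta's row uses its ~$600B ecosystem figure rather than its 2025 spending.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Commitment:
    organisation: str
    low_usd_bn: float  # lower bound of the headline figure, in billions of US dollars
    period: str
    multi_year: bool   # False when the figure covers a single year only


# Lower bounds transcribed from the table; Meta's row uses the ~$600B ecosystem figure.
COMMITMENTS = [
    Commitment("Meta", 600, "2025–2028", True),
    Commitment("Microsoft", 80, "2025", False),
    Commitment("Alphabet / Google", 75, "2025", False),
    Commitment("OpenAI (Stargate)", 500, "2025–2029", True),
    Commitment("Saudi Arabia PIF", 100, "2025–2030", True),
    Commitment("UAE MGX", 100, "2025–2030", True),
]


def total_usd_bn(commitments: list[Commitment], multi_year: Optional[bool] = None) -> float:
    """Sum lower-bound figures, optionally keeping only single-year or multi-year rows."""
    return sum(
        c.low_usd_bn for c in commitments
        if multi_year is None or c.multi_year == multi_year
    )


print(f"single-year rows: ${total_usd_bn(COMMITMENTS, multi_year=False):,.0f}B")
print(f"multi-year rows:  ${total_usd_bn(COMMITMENTS, multi_year=True):,.0f}B")
```

The multi-year rows alone exceed $1 trillion at their lower bounds, before any adjustment for overlap between them.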

Saudi Arabia's Public Investment Fund, the UAE's MGX, and South Korea's national AI initiative have committed hundreds of billions more, suggesting that the race for artificial superintelligence has become a matter of national economic strategy as much as corporate competition. Altman's own Stargate project, a joint venture with SoftBank and Oracle that plans to invest up to $500 billion over four years, represents perhaps the single largest coordinated infrastructure investment in technological history, focused entirely on building the physical substrate required for superintelligence-class models (Financial Times 2025).

The implications of this investment cascade are twofold. First, it creates self-reinforcing momentum — each company's commitment pressures competitors to match it, ensuring that slowdowns in one firm's pace are compensated by acceleration elsewhere. Second, it means the 2028 timeline is not dependent on a single organisation achieving a breakthrough; it reflects a broad industrial bet that the threshold will be crossed within that window regardless of who crosses it first.

Dario Amodei and the Anthropic Perspective: AGI First, Then ASI

Altman's rival and former colleague, Anthropic co-founder and CEO Dario Amodei, has offered a slightly different but convergent timeline. In a widely cited 2025 blog post titled "Machines of Loving Grace", Amodei argued that transformative AI — systems that could compress decades of scientific progress into a few years — could arrive as early as 2026 or 2027 (Amodei 2025). Superintelligence, in his framing, would follow within a short period thereafter.

"I think there's a real chance that we'll have AI systems that are genuinely smarter than humans at most tasks within the next two to three years. This is both a remarkable opportunity and a profound risk." — Dario Amodei, Anthropic, 2025

Amodei's framing is notable for its emphasis on the consequences of such systems rather than the mechanics of their creation. Anthropic was founded precisely because its team believed that advanced AI posed existential risks if developed without sufficient safety infrastructure. Yet Amodei has not used the imminence of superintelligence as an argument to slow down; rather, he has consistently argued that safety-conscious organisations must reach the frontier first to ensure the technology is developed responsibly. This creates a philosophical paradox that sits at the heart of the modern AI landscape: the safest path may require racing toward the very thing you fear. Anthropic's Constitutional AI methodology and its interpretability research programme represent the most rigorous public attempts to make powerful AI systems comprehensible and steerable.

Competing Voices: Musk, Hassabis, and the Spectrum of Expert Opinion

The landscape of expert opinion on AGI and ASI timelines is far from monolithic. xAI founder Elon Musk predicted in late 2025 that AI systems would surpass the cognitive capabilities of any single human being by the end of 2026 (Musk 2025). Musk's framing differs from Altman's in that it compares AI with individual humans rather than with collective human intelligence: a meaningful distinction, but one that points along the same trajectory.

"By the end of next year, AI will be smarter than any single human. Smarter than me, smarter than you, smarter than everyone. We need to be ready for that." — Elon Musk, xAI Event, November 2025

Google DeepMind CEO Demis Hassabis offers a more measured, though still historically unprecedented, estimate. In multiple interviews throughout 2024 and 2025, Hassabis placed human-level AI (what many would call AGI) in the range of 2030 to 2035, noting that while progress has been extraordinary, the final stretch of capability development is likely to be the most technically demanding (MIT Technology Review 2025). DeepMind's work on AlphaFold, which solved a 50-year grand challenge in structural biology, and AlphaGeometry, which reached olympiad-level performance on geometry problems, represent perhaps the most rigorous empirical evidence that AI is approaching or matching human-expert performance in specific cognitive domains.

"I believe we'll get to AGI within this decade, maybe sooner. But I'm cautious about overpromising timelines. This is genuinely hard, and the last mile is often the hardest." — Demis Hassabis, MIT Technology Review, 2025

Yoshua Bengio, one of the founding fathers of modern deep learning and a recipient of the Turing Award, has taken a strikingly different tone. While acknowledging rapid progress, Bengio has warned that the race to superintelligence is being conducted recklessly, and that alignment (ensuring AI systems behave in accordance with human values) may be the hardest unsolved problem in science (Bengio 2025). He has called for international governance frameworks analogous to those governing nuclear weapons, a position that places him at odds with most Silicon Valley leadership on the question of pace.

Expert Predictions on AGI and Superintelligence Timelines

| Expert | Organisation | AGI Timeline | ASI Timeline | Key Caveat | Source |
|---|---|---|---|---|---|
| Sam Altman | OpenAI | 2025–2027 | Late 2028 | Dependent on reasoning model scaling and data centre build-out | India AI Summit 2026 |
| Dario Amodei | Anthropic | 2026–2027 | 2028–2029 | Safety infrastructure must precede deployment | Machines of Loving Grace, 2025 |
| Elon Musk | xAI | End of 2026 | Not specified | Focuses on surpassing individual human intelligence | xAI Event, Nov 2025 |
| Demis Hassabis | Google DeepMind | 2030–2035 | Post-2035 | "Last mile" is hardest; cautions against overpromising | MIT Technology Review, 2025 |
| Yoshua Bengio | Mila / University of Montreal | Uncertain | Uncertain | Alignment unsolved; calls for nuclear-style governance | Montreal AI Debate, 2025 |
| Gary Marcus | Independent / NYU | Decades away | Far future | Architectural paradigm shift needed; scaling alone insufficient | Montreal AI Debate, 2025 |
| Ilya Sutskever | Safe Superintelligence Inc. | Near-term | Within decade | Scaling hypothesis remains reliable empirical guide | NeurIPS 2024 |

Scaling Laws: The Engine of Progress

Underlying nearly every optimistic prediction about superintelligence timelines are the so-called scaling laws of deep learning, first formally documented by OpenAI researchers Jared Kaplan, Sam McCandlish, and colleagues in their landmark 2020 paper (Kaplan et al. 2020). The core finding was deceptively simple: the performance of large language models, measured as test loss, improves predictably as a function of three variables (the amount of compute used for training, the size of the model in parameters, and the size of the training dataset). Critically, these improvements follow smooth power-law curves. Each tenfold increase in scale buys a roughly constant fractional reduction in loss, so returns diminish at a steady, forecastable rate rather than stalling abruptly, which lets labs predict the performance of a larger model before they train it.
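The power-law form can be made concrete. Kaplan et al. (2020) fit test loss as a function of model size N as L(N) = (N_c / N)^α_N. The sketch below plugs in the approximate constants reported in that paper (α_N ≈ 0.076 and N_c ≈ 8.8 × 10^13 non-embedding parameters), which should be treated as illustrative rather than authoritative. The relation applies only when data and compute are not the limiting factor, and it predicts loss, not any particular capability.

```python
ALPHA_N = 0.076  # approximate model-size exponent reported by Kaplan et al. (2020)
N_C = 8.8e13     # approximate fitted constant, in non-embedding parameters


def loss_from_params(n_params: float) -> float:
    """Predicted test loss (nats per token) from model size alone: L(N) = (N_c / N) ** alpha_N."""
    return (N_C / n_params) ** ALPHA_N


def loss_ratio_for_scaleup(factor: float) -> float:
    """Ratio new_loss / old_loss when the model is made `factor` times larger."""
    return factor ** (-ALPHA_N)


for n in (1e8, 1e9, 1e10, 1e11):
    print(f"N = {n:.0e}: predicted loss {loss_from_params(n):.3f}")
print(f"each 10x in parameters multiplies loss by {loss_ratio_for_scaleup(10):.3f}")
```

With this exponent each tenfold increase in parameters cuts loss by about 16 percent, whatever the starting size. Absolute gains therefore shrink, but they shrink predictably, which is the property forecasters lean on.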

This empirical regularity has become the backbone of the industry's optimism. If scaling laws continue to hold — and there is vigorous debate about whether they will — then the models of 2027 or 2028 will be to today's GPT-4 class systems roughly what GPT-4 was to GPT-2: categorically more capable, qualitatively different, and surprising in their emergent behaviours. OpenAI's o3 model, released in early 2025, demonstrated that test-time scaling — using more compute during inference to "think longer" — can yield capability gains that complement traditional training-time scaling, effectively opening a second dimension of improvement.
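OpenAI has not fully disclosed how o3 turns extra inference compute into better answers, so the sketch below illustrates a different, well-known form of test-time scaling instead: drawing several independent answers and taking a majority vote. It assumes each sample is independently correct with probability p and that answers are simply right or wrong. Both are simplifications; real samples from one model are correlated, which shrinks the gain.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct,
    when each sample is correct with probability p (binary right/wrong answers)."""
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    need = n // 2 + 1  # smallest number of correct samples that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


# More samples means more inference compute spent on the same question.
for n in (1, 5, 25, 101):
    print(n, round(majority_vote_accuracy(0.6, n), 3))
```

When p is above 0.5, accuracy rises as more samples are drawn, which is the sense in which spending more compute at inference can buy capability without retraining the model. When p is below 0.5, voting makes results worse.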

"The scaling hypothesis remains our most reliable empirical guide. We have no strong theoretical reason to believe it will break down soon, and strong empirical evidence that it hasn't broken down yet." — Ilya Sutskever, NeurIPS 2024

However, some researchers point to potential bottlenecks that could disrupt clean scaling trajectories. Data availability is one critical constraint: internet-derived training data may be approaching exhaustion, which is driving investment in synthetic data generation, scientific literature, and multimodal datasets drawn from video and sensor streams. Energy availability is another. Training a frontier AI model in 2025 consumes electricity equivalent to a small city's annual usage, and the infrastructure required to power the 2028 generation of models will demand grid-scale energy solutions that are not yet in place across most of the world (International Energy Agency 2025).
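Comparisons such as "a small city's annual usage" come from back-of-the-envelope arithmetic: accelerator count times power draw times training time, scaled up by the data centre's power usage effectiveness (PUE) to cover cooling and other overhead. The sketch below shows that calculation. Every input (100,000 accelerators at 1 kW each, a 90-day run, a PUE of 1.2, 10 MWh per household per year) is a hypothetical placeholder, not a figure from the IEA or from any model developer.

```python
def training_energy_gwh(accelerators: int, watts_each: float, days: float, pue: float) -> float:
    """Facility energy for one training run, in GWh."""
    it_load_w = accelerators * watts_each   # power drawn by the accelerators and their hosts
    hours = days * 24
    facility_wh = it_load_w * hours * pue   # PUE scales IT energy up to whole-facility energy
    return facility_wh / 1e9                # Wh -> GWh


def household_equivalents(energy_gwh: float, kwh_per_household_year: float) -> float:
    """How many households' annual electricity use the given energy would cover."""
    return energy_gwh * 1e6 / kwh_per_household_year  # GWh -> kWh


# Hypothetical inputs for illustration only.
run_gwh = training_energy_gwh(accelerators=100_000, watts_each=1_000, days=90, pue=1.2)
homes = household_equivalents(run_gwh, kwh_per_household_year=10_000)
print(f"~{run_gwh:.0f} GWh, roughly the annual use of {homes:,.0f} households")
```

Because the total is a straight product, doubling the accelerator count or the length of the run doubles the energy bill, which is why grid capacity can become a binding constraint alongside chip supply.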

The Sceptic's Case: Why 2028 May Be Wishful Thinking

Not everyone accepts the 2028 narrative. Brent Smolinski, IBM's CTO for AI platforms, has argued publicly that claims of imminent superintelligence are "totally exaggerated" and disconnected from the actual technical realities of current systems (Smolinski 2025). His critique centres on the observation that today's AI systems, however impressive in narrow benchmarks, lack robust common sense, true causal reasoning, and the ability to reliably handle high-context, long-horizon tasks — precisely the capabilities that distinguish human intelligence from pattern matching at scale.

Gary Marcus, cognitive scientist and longstanding AI sceptic, has catalogued numerous failure modes of current large language models — hallucination, brittleness, lack of genuine understanding, and susceptibility to adversarial prompts — and argued that these are not engineering bugs to be patched but fundamental architectural limitations that no amount of additional compute will resolve (Marcus 2025). In Marcus's view, the path to AGI likely requires not just more of the same, but a paradigmatic shift in how AI systems are designed — incorporating symbolic reasoning, causal models, and embodied experience in ways that current transformer architectures do not support.

"We keep benchmarking systems on tests that we then realise they've over-fit to. Every time we make a harder test, the systems struggle. That tells you something important about what they actually understand." — Gary Marcus, AI Debate, Montreal, 2025

There is also the question of definition. "Superintelligence" is not a precisely defined technical term, and the differences between how Altman, Amodei, and Hassabis use it are significant. If superintelligence means "better than any single human at most cognitive tasks," the 2026–2028 window is genuinely plausible given the current trajectory. If it means "a system with recursive self-improvement capabilities that could reshape civilisation within months of deployment," the engineering and safety challenges involved are of an entirely different order of magnitude.

Safety, Governance, and the Race Nobody Wants to Lose

Perhaps the most consequential dimension of the 2028 debate is not whether superintelligence will arrive, but whether humanity will be ready when it does. The alignment problem — ensuring that a superintelligent system pursues goals that are genuinely beneficial to humanity rather than goals that merely appear to satisfy human objectives in the short term — remains unsolved, and many researchers argue it is the most important scientific and philosophical challenge of our era (80,000 Hours 2025).

OpenAI has established a Preparedness team and published a Preparedness Framework that outlines how it plans to evaluate and contain potentially dangerous AI capabilities. Anthropic has built interpretability research into its core technical roadmap, attempting to develop tools that can examine the internal representations of neural networks and identify potentially deceptive or harmful goal structures. DeepMind's safety team has published influential theoretical work on reward modelling, scalable oversight, and debate as mechanisms for aligning powerful AI systems.

At the international level, the UK AI Safety Institute, established following the Bletchley Park AI Safety Summit in November 2023, has begun developing standardised evaluation frameworks for frontier AI models. The United States, European Union, and China have each advanced AI governance frameworks, though with markedly different emphases: the EU's AI Act focuses on risk classification and prohibited uses; the 2023 US executive order on AI emphasised security evaluation requirements for frontier models before it was revoked in January 2025; and China's regulations focus on content governance and national security applications.

"The critical question is not whether we build superintelligence but whether we build it in a way that keeps humans in a position of meaningful oversight. Once you lose that leverage, you may not get it back." — Stuart Russell, University of California, Berkeley, 2025

What 2028 Would Actually Mean

If Altman's prediction proves accurate — even approximately — the implications extend far beyond the technology sector. An AI system capable of autonomously conducting research at the frontier of human knowledge could compress decades of pharmaceutical development into months, potentially delivering cures for diseases that currently kill millions annually. It could design new materials with properties that enable breakthroughs in clean energy, solve protein-folding and drug-target identification problems across entire disease families simultaneously, and develop mathematical proofs in domains that human mathematicians have spent generations failing to crack.

Economically, the arrival of systems that can perform the cognitive work of multiple expert humans across virtually all white-collar domains would represent the largest single disruption to labour markets in recorded history. McKinsey Global Institute estimates suggest that AI automation could affect between 60 and 80 percent of professional tasks within a decade of AGI arrival — not necessarily eliminating jobs but radically restructuring what humans contribute to them (McKinsey 2024). The distributional consequences depend almost entirely on policy choices that most governments have not yet seriously begun to formulate.

Geopolitically, the nation or alliance that first achieves and controls superintelligence gains an advantage in economic productivity, scientific discovery, cyber capabilities, and strategic intelligence that could prove irreversible. This reality is not lost on policymakers in Washington, Beijing, Brussels, or New Delhi, and it explains why governments are treating AI development not merely as an industrial policy question but as a matter of national security.

Why This Matters

The 2028 superintelligence thesis is not an abstract thought experiment — it is a planning horizon with concrete implications for every sector of the global economy. For business leaders, it means that competitive advantages built on human intellectual capital may have a shorter shelf life than previously assumed. For policymakers, it means that governance frameworks must be designed not for the AI systems of today but for systems that may be orders of magnitude more capable within 24 months. For researchers in safety and alignment, it means the window for developing robust oversight mechanisms is narrowing faster than many in the field had anticipated. And for the broader public, it means that the most transformative technology in human history may arrive not in a distant future but within a period shorter than a typical election cycle.

Conclusion: A Countdown the World Must Take Seriously

Sam Altman's prediction of superintelligence by 2028 may prove premature. The history of technology is littered with confident predictions that overestimated the pace of transformative change. But the extraordinary confluence of investment, compute scaling, algorithmic progress, and genuine capability improvements in current systems suggests that even if the precise date is wrong, the general trajectory is not. The world is moving toward systems of unprecedented cognitive capability at a speed that outpaces our institutional ability to understand, regulate, and prepare for them.

The 2028 thesis is best understood not as a certain forecast but as a planning horizon — a date by which responsible actors in government, industry, academia, and civil society should have robust frameworks in place, not for the world as it is, but for the world as it is rapidly becoming. Altman, Amodei, Hassabis, and even their critics are united in one belief: whatever the exact date, this transition is no longer a matter for the speculative imagination. It is an engineering project well underway, funded at civilisational scale, and operating on a timeline that the rest of the world can no longer afford to ignore.

Bibliography

- 80,000 Hours (2025) 'Will We Have AGI by 2030?'. Available at: https://80000hours.org.

- Altman, S. (2025) Interview with Wired. Available at: https://www.wired.com.

- Altman, S. (2026) 'Superintelligence prediction', India AI Impact Summit 2026, New Delhi, February 2026. Available at: https://indiaaisummit.com.

- Amodei, D. (2025) 'Machines of Loving Grace', essay published on darioamodei.com. Available at: https://darioamodei.com/machines-of-loving-grace.

- Bengio, Y. (2025) Remarks at the Montreal AI Debate, Montreal, 2025. Available at: https://mila.quebec.

- Hassabis, D. (2025) Interview, MIT Technology Review. Available at: https://www.technologyreview.com.

- International Energy Agency (2025) Electricity 2025 Report. Available at: https://www.iea.org.

- Kaplan, J., McCandlish, S. et al. (2020) 'Scaling Laws for Neural Language Models', OpenAI. Available at: https://arxiv.org/abs/2001.08361.

- Marcus, G. (2025) Remarks at the Montreal AI Debate, Montreal, 2025. Available at: https://garymarcus.substack.com.

- McKinsey Global Institute (2024) 'The Economic Potential of Generative AI: The Next Productivity Frontier'. Available at: https://www.mckinsey.com.

- Musk, E. (2025) Remarks at xAI Event, November 2025. Available at: https://x.ai.

- Russell, S. (2025) Public lecture, University of California, Berkeley. Available at: https://people.eecs.berkeley.edu/~russell/.

- Smolinski, B. (2025) Remarks on superintelligence claims, IBM AI Platforms. Available at: https://www.ibm.com.

- Sutskever, I. (2024) Keynote address, NeurIPS 2024. Available at: https://neurips.cc.

- UK AI Safety Institute (2023–2025) Frontier AI Safety Commitments. Available at: https://www.aisi.gov.uk.

- Zuckerberg, M. (2025) Meta Q1 2025 Earnings Call. Available at: https://investor.fb.com.

About the Author

Sarah Chen

AI & Automotive Technology Editor
